Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

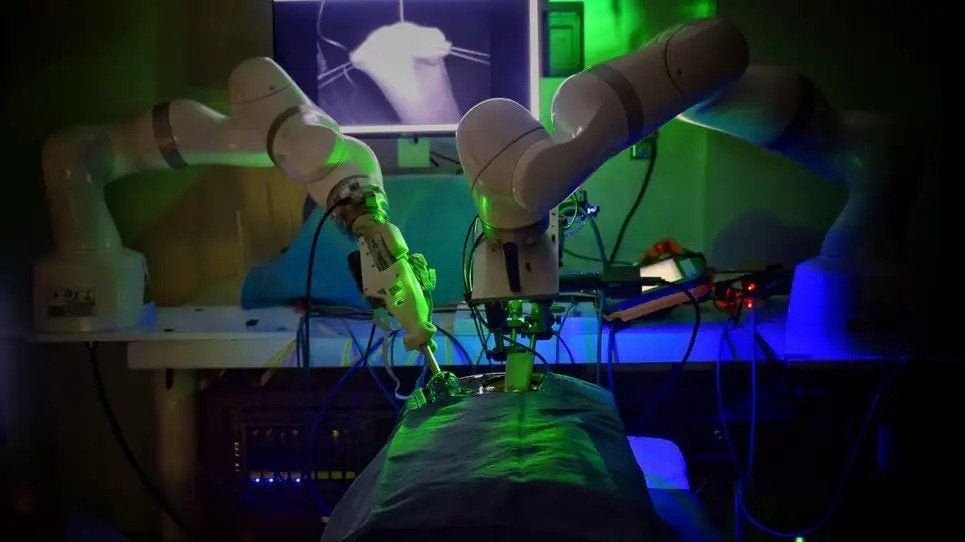

The artificial intelligence boom has already begun to penetrate the medical field with AI-based visit summaries and analysis of patient conditions. Now, new research shows how the AI training techniques used for ChatGPT can be used to train surgical robots to perform tasks on their own.

Researchers at Johns Hopkins University and Stanford University developed a training model using video recordings of human-controlled robotic arms performing surgical tasks. By having the model learn to imitate the actions shown in the videos, the researchers believe they can reduce the need to program each individual movement required for a procedure. From The Washington Post:

The robots themselves learned to manipulate needles, tie knots, and suture wounds. Moreover, the trained robots went beyond mere imitation, correcting their own slip-ups without being told, for example by picking up a dropped needle. Scientists have already begun working on the next phase: combining the different skills in complete surgeries performed on animal cadavers.

To be sure, robotics is already used in the operating room. In 2018, the “surgery on grapes” meme highlighted how robotic arms can aid in surgery by providing a higher level of precision. Almost 876,000 robot-assisted surgeries were conducted in 2020. Robotic instruments can reach places and perform functions in the body where a surgeon’s hand would never fit, and they are not subject to tremors. Thin, precise instruments can help avoid nerve damage. But these robots are usually operated manually by a surgeon with a controller. The surgeon is always in charge.

Skeptics of more autonomous robots worry that AI models like ChatGPT aren’t “intelligent,” but simply imitate what they’ve seen before and don’t understand the underlying concepts they’re dealing with. The effectively infinite variety of pathologies across human patients poses a challenge: what if the AI model has never seen a particular scenario before? Something can go wrong during surgery in a split second, and what if the AI isn’t trained to react?

At a minimum, autonomous robots used in surgery must be approved by the Food and Drug Administration. In other cases, where doctors are using AI to summarize patient visits and make recommendations, FDA approval is not required because the doctor is technically supposed to review and approve any information the AI generates. This is concerning because there is already evidence that AI bots will make bad recommendations, or hallucinate and include information in meeting transcripts that was never uttered. How often will a tired, overworked doctor rubber-stamp what an AI produces without closely scrutinizing it?

This is reminiscent of recent reports about Israeli soldiers relying on AI to identify attack targets without verifying the information very closely. “Soldiers poorly trained in the use of the technology attacked human targets without corroborating [the AI’s] predictions at all,” a Washington Post story reads. “The only confirmation required at that particular time was that the target was a male.” Things can go awry when humans become complacent and are not sufficiently in the loop.

Healthcare is another area with high stakes, certainly higher than in consumer markets. If Gmail summarizes an email incorrectly, it’s not the end of the world. AI systems misdiagnosing health problems, or making mistakes during surgery, is a far more serious problem. Who is responsible in that case? The Post interviewed the director of robotic surgery at the University of Miami, and here’s what he had to say:

“The stakes are so high,” he said, “because it’s a life and death issue.” Each patient’s anatomy is different, as is the way a disease behaves in each patient.

“I look at [the images from] CT scans and MRIs and then do the surgery,” by controlling the robotic arms, Parekh said. “If you want the robot to perform surgery on its own, it needs to understand how to read all the imaging, CT scans and MRIs.” Also, robots must learn how to perform keyhole, or laparoscopic, surgeries using very small incisions.

It’s hard to take seriously the idea that AI will ever be infallible when no technology is. Of course, this autonomous technology is interesting from a research point of view, but the fallout from a botched surgery conducted by an autonomous robot would be enormous. Who do you punish if something goes wrong, and whose medical license is revoked? Humans aren’t infallible either, but at least patients have the peace of mind that their surgeons have gone through years of training and can be held accountable if something goes wrong. AI models are crude simulacra of humans, sometimes behave erratically, and have no moral compass.

Another concern is that relying too heavily on autonomous robots for surgery could eventually erode doctors’ own abilities and knowledge, much as making dating easier through apps leaves the relevant social skills rusty.

If doctors are tired and overworked, which is one reason the researchers suggest this technology could be valuable, perhaps the systemic problems causing the shortages should be addressed instead. It has been widely reported that the United States has an acute shortage of doctors as the field becomes increasingly inaccessible. The country is on track to experience a shortage of 10,000 to 20,000 surgeons by 2036, according to the Association of American Medical Colleges.